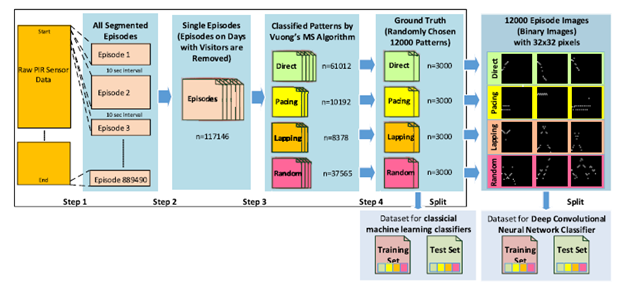

Living alone is increasingly common among the elderly because of the cost of elderly care and concerns about privacy invasion. However, a person with dementia cannot continue to live alone, so early detection of dementia is crucial. Systems based on wearable devices or cameras are not preferred for long-term monitoring. This paper proposes a deep convolutional neural network (DCNN) classifier for the indoor travel patterns of elderly people living alone, using an open data set collected with device-free, non-privacy-invasive binary (passive infrared) sensors. Travel patterns are classified as direct, pacing, lapping, or random according to the Martino–Saltzman (MS) model. MS travel patterns are closely related to a person's cognitive state and can therefore be used to detect the early stage of dementia. We used an open data set published by the Center for Advanced Studies in Adaptive Systems project at Washington State University. The data set was collected by monitoring a cognitively normal elderly person with wireless passive infrared sensors for 21 months. First, 117 320 travel episodes were extracted from the data set and labeled by an MS travel pattern classifier algorithm to obtain the ground truth. Then, 12 000 episodes (3000 for each pattern) were randomly selected from all episodes to form the training and testing data set. Finally, the DCNN's performance was compared with that of seven classical machine-learning classifiers. The random forest and the DCNN yielded the best classification accuracies, 94.48% and 97.84%, respectively. Thus, the proposed DCNN classifier can be used to infer dementia through travel pattern matching.
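The balanced selection step described above (an equal number of randomly chosen episodes per travel pattern) can be sketched as follows. This is only an illustration, not the paper's code: the function name `balanced_sample`, the `(episode_id, label)` tuple layout, and the fixed seed are assumptions.

```python
import random
from collections import defaultdict

# The four Martino-Saltzman travel patterns named in the abstract.
PATTERNS = ("direct", "pacing", "lapping", "random")


def balanced_sample(episodes, per_class, seed=0):
    """Pick `per_class` episodes per pattern, without replacement.

    `episodes` is an iterable of (episode_id, label) pairs; this layout
    is a hypothetical stand-in for the real episode records.
    """
    by_label = defaultdict(list)
    for episode_id, label in episodes:
        by_label[label].append(episode_id)

    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    selected = []
    for label in PATTERNS:
        pool = by_label[label]
        if len(pool) < per_class:
            raise ValueError(f"not enough '{label}' episodes: {len(pool)}")
        selected.extend((eid, label) for eid in rng.sample(pool, per_class))

    rng.shuffle(selected)  # mix the classes before any train/test split
    return selected
```

With `per_class=3000` this would produce the 12 000-episode subset mentioned in the abstract, provided each pattern has at least 3000 labeled episodes.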

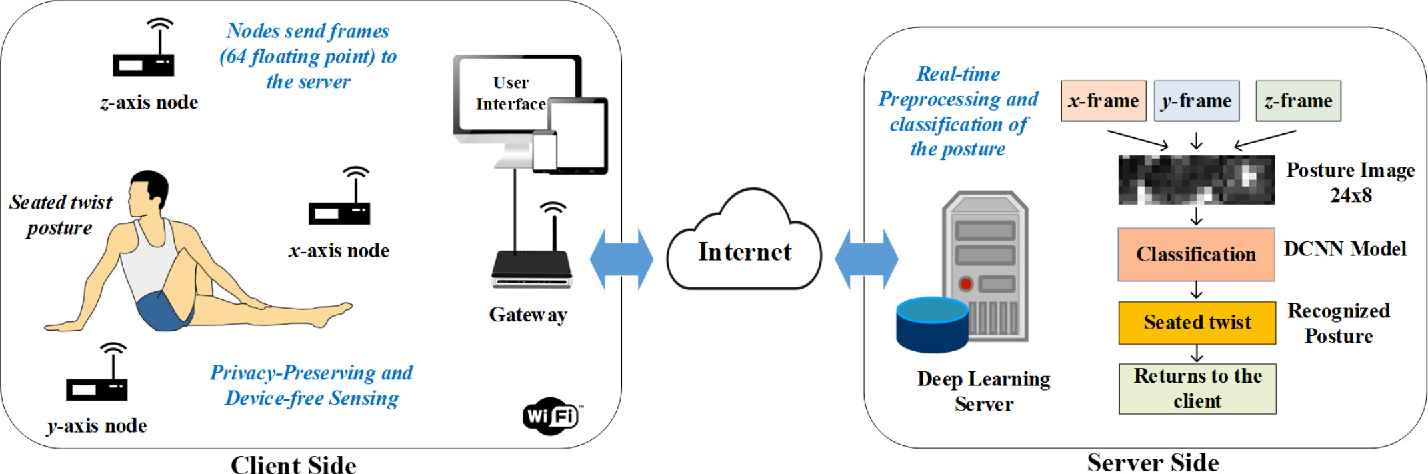

In recent years, the number of yoga practitioners has increased drastically, and more men and older people practice yoga than ever before. An Internet of Things (IoT)-based yoga training system is needed for those who want to practice yoga at home. Some studies have proposed RGB/Kinect camera-based or wearable device-based yoga posture recognition methods with high accuracy; however, the former raise privacy concerns and the latter are impractical for long-term use. Thus, this paper proposes an IoT-based, privacy-preserving yoga posture recognition system that employs a deep convolutional neural network (DCNN) and a wireless sensor network (WSN) built on low-resolution infrared sensors. The WSN has three nodes (x, y, and z axes), each of which integrates an 8×8-pixel thermal sensor module and a Wi-Fi module for connecting to the deep learning server. We invited 18 volunteers to perform 26 yoga postures in two sessions, each lasting 20 s. The recorded sessions are first saved as .csv files, then preprocessed and converted to grayscale posture images. In total, 93 200 posture images are used to validate the proposed DCNN models. Tenfold cross-validation showed that the F1-scores of the models trained with xyz (all three axes) and y (y-axis only) posture images were 0.9989 and 0.9854, respectively. The average latency for classifying a single posture image on the server was 107 ms. Thus, we conclude that the proposed IoT-based yoga posture recognition system has great potential for privacy-preserving yoga training.
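The conversion from an 8×8 thermal reading to a grayscale posture image can be sketched as below. The abstract does not say how the preprocessing is done, so the per-frame min-max scaling, the function names, and the choice to stack the three axes as image channels are all assumptions made for illustration.

```python
import numpy as np


def frame_to_grayscale(frame_celsius):
    """Scale one 8x8 temperature frame to an 8-bit grayscale image.

    Per-frame min-max scaling is an assumption; a fixed temperature
    range would work the same way.
    """
    frame = np.asarray(frame_celsius, dtype=np.float64)
    if frame.shape != (8, 8):
        raise ValueError(f"expected an 8x8 frame, got {frame.shape}")
    lo, hi = frame.min(), frame.max()
    if hi <= lo:  # flat frame: nothing to scale
        return np.zeros((8, 8), dtype=np.uint8)
    scaled = (frame - lo) / (hi - lo)
    return np.round(scaled * 255).astype(np.uint8)


def stack_xyz(frame_x, frame_y, frame_z):
    """Combine the three node frames into one (8, 8, 3) array.

    Stacking as channels is a hypothetical layout, not necessarily the
    one used for the xyz models in the paper.
    """
    return np.stack(
        [frame_to_grayscale(f) for f in (frame_x, frame_y, frame_z)],
        axis=-1,
    )
```

A row of 64 temperature values read from a .csv file would first be reshaped to 8×8 before being passed to `frame_to_grayscale`.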

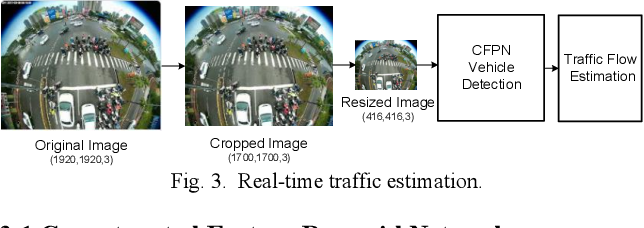

Real-time embedded traffic flow estimation (RETFE) systems need accurate and efficient vehicle detection models that fit within tight limits on budget, dimensions, memory, and computing power. In recent years, object detection has become a less challenging task thanks to state-of-the-art deep CNN-based models such as RCNN, SSD, and YOLO; however, these models cannot deliver the performance RETFE systems require because of their complex, time-consuming architectures. In addition, detecting small objects (<30×30 pixels) remains challenging for existing methods. Thus, we propose a shallow model named Concatenated Feature Pyramid Network (CFPN), inspired by YOLOv3, to provide the above-mentioned performance for smaller object detection. The main contribution is a proposed concatenated block (CB), which has a reduced number of convolutional layers and uses concatenations instead of time-consuming algebraic operations. The superiority of CFPN is confirmed on the COCO dataset and an in-house CarFlow dataset on an Nvidia TX2. We therefore conclude that CFPN is useful for real-time embedded detection of smaller objects.

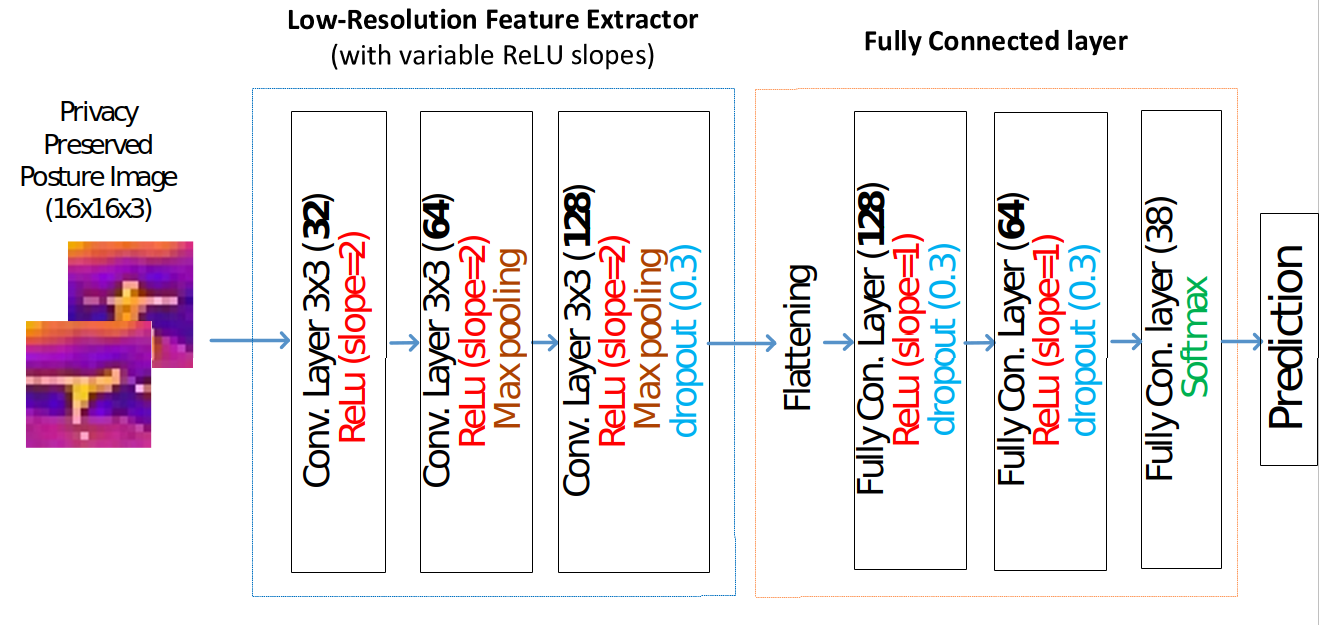

Implementation of our ICIP 2020 paper "Lownet: privacy preserved Ultra-Low Resolution Posture Image Classification". In this project, we created the LowNet architecture, which is suited to low-resolution image classification. We are releasing TIP38 (Thermal Image Posture 38 class), a yoga posture image dataset captured by an infrared camera. We propose the "Lownet" model with ReLU activation functions that have variable slopes.
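As a rough illustration of a ReLU with an adjustable slope, the sketch below exposes a slope for each side of zero. The exact activation used in LowNet is not given here, so the function `sloped_relu` and its two parameters are assumptions, not the paper's definition.

```python
import numpy as np


def sloped_relu(x, pos_slope=1.0, neg_slope=0.0):
    """ReLU-style activation with a configurable slope on each side.

    pos_slope=1, neg_slope=0 gives the standard ReLU; other values are
    hypothetical settings for a variable-slope variant.
    """
    x = np.asarray(x, dtype=np.float64)
    return np.where(x > 0, pos_slope * x, neg_slope * x)
```

In a trainable network the slopes would typically be learned or tuned per layer; here they are plain arguments to keep the example small.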

People with speech impairments find it difficult to communicate with the wider community, which creates a natural demand for automatic sign language translation systems. Many methods and datasets have been proposed for English sign language, but very few exist for Arabic sign language. Therefore, we present our collected and labeled Arabic Sign Language Alphabet Dataset (ASLAD), consisting of 14400 images of 32 signs (offered here) collected from 50 people. We analyze the performance of state-of-the-art object detection models on ASLAD. Moreover, successful real-time detection of Arabic signs is achieved on sample videos from YouTube. Finally, we believe ASLAD will offer an opportunity to experiment with and develop automated systems for people with hearing disabilities using computer vision and deep learning models.

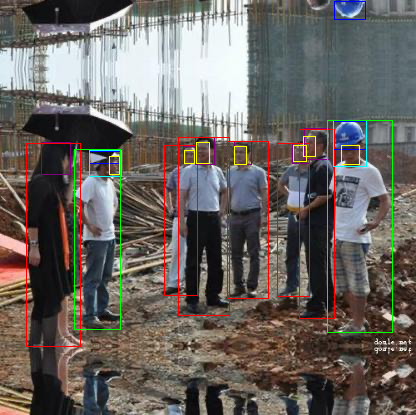

An automatic system that checks whether workers and visitors are wearing safety helmets is important for reducing fatalities and injuries at construction sites and factories. However, few adequate datasets exist for safety helmet detection, apart from one dataset named "Safety Helmet Detection" on Kaggle. That dataset has 5000 images with 3 object labels (person, helmet, head), but most of the images are incompletely labeled. To create a good real-time safety helmet detection model, we added 3 more objects and labeled all 5000 images (offered here). The experimental results show significant improvements in mAP; comparison results and discussion are provided for the YOLOv3, YOLOv4 and YOLOv5 models. Moreover, real-time safety helmet detection is successfully achieved on sample videos from YouTube.

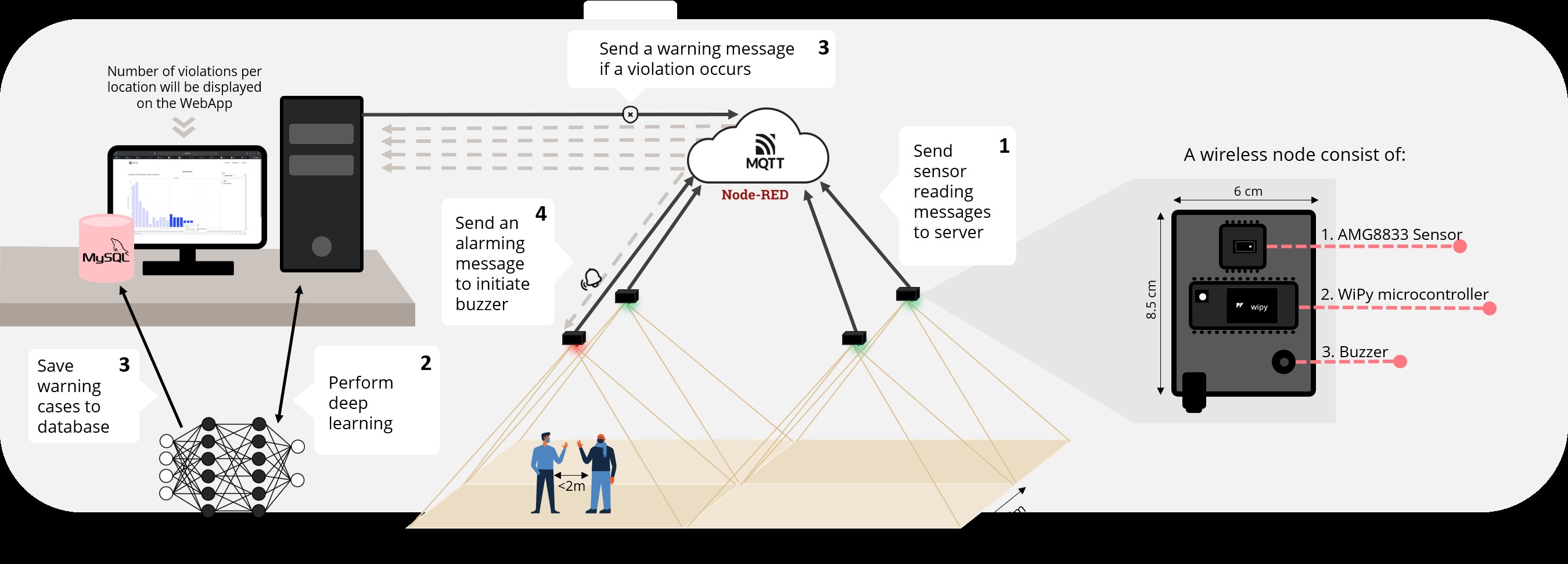

During the current COVID-19 pandemic, life has clearly slowed down, and people have become less productive when working remotely through online platforms. It has become necessary to find solutions that facilitate daily routines such as going to school, work and travel while abiding by social distancing guidelines and coping with COVID-19 or any future pandemic. We combine IoT and deep learning to propose an indoor, privacy-preserving social distancing system based on a wireless sensor network and the 8×8 low-resolution infrared sensor AMG8833, which companies and organizations can use to ensure that social distancing is practiced at all times. To the best of our knowledge, there is no practical privacy-preserving technology on the market. We collected a total of 6606 low-resolution infrared images using four wireless sensor nodes to cover as many as 200 cases. We trained the YOLOv4-tiny object detection model on our dataset to detect persons and achieved an mAP@0.5 of 95.4% and an inference time of 5.16 ms. Thus, we conclude that our proposed social distancing system has potential in fighting COVID-19.
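Once persons have been detected, a distancing check can be as simple as comparing the distances between detection centers. The abstract does not describe this step, so the sketch below is only one plausible approach: the box format `(x_min, y_min, x_max, y_max)`, the function names, and the pixel threshold are all assumptions.

```python
from itertools import combinations
from math import hypot


def box_center(box):
    """Center of a box given as (x_min, y_min, x_max, y_max) in pixels."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def close_pairs(boxes, min_pixels):
    """Return index pairs of detections closer than `min_pixels`.

    `min_pixels` is a hypothetical threshold; mapping it to a physical
    distance depends on sensor height and field of view.
    """
    centers = [box_center(b) for b in boxes]
    pairs = []
    for (i, ci), (j, cj) in combinations(enumerate(centers), 2):
        if hypot(ci[0] - cj[0], ci[1] - cj[1]) < min_pixels:
            pairs.append((i, j))
    return pairs
```

For example, `close_pairs([(0, 0, 2, 2), (2, 0, 4, 2), (6, 6, 8, 8)], 3)` flags only the first two detections, whose centers are 2 pixels apart.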

The use of fish-eye cameras for embedded real-time vehicle detection and tracking has grown in recent years because of their wide coverage area compared with traditional cameras. We propose to a) collect fish-eye videos and create a UAE-featured fish-eye traffic dataset, and b) develop Recursively Fused Feature Networks (RFFN) for small vehicle detection using CNNs. The expected results are 1 patent, 2 international conference papers, 1-2 international SCI-indexed journal papers, and an open UAE fish-eye traffic dataset, which will be a significant contribution to computer vision and deep learning research. Moreover, vehicle tracking will be done with the DeepSORT model, from which we can estimate traffic flow, accidents, and violations. Furthermore, we can predict impending accidents involving vehicles and pedestrians crossing the road and prevent them by controlling the traffic signal or alerting the pedestrians.

The number of elderly people living alone at home without the supervision of a caregiver is rapidly increasing worldwide. Indoor tracking is very useful for detecting activities of daily living (ADLs) and accidental falls, so that long-term care and emergency actions can be taken if needed. We will develop a novel system that (1) does not require the elderly to wear anything (device-free), (2) is non-privacy-invasive (no camera), since we use binary sensors and an 8×8 ultra-low-resolution infrared sensor array (AMG8833), (3) can detect a motionless subject, and (4) tracks the elderly with high accuracy (>50 cm). The main anticipated outcomes are: (1) a novel, accurate, device-free, non-privacy-invasive indoor tracking system for elderly people living alone, (2) the design of a temporal and spatial deep learning classifier for accidental falls, and (3) substantial budget savings for families and the government from using this system instead of hiring full-time caregivers.

Monitoring students’ attention levels in the classroom is a key consideration in the teaching process. Instructors try to monitor and maintain a high attention level for each student throughout the class session. Recent developments in computer vision provide tools for real-time head-pose estimation of multiple people. We present a novel and simple real-time attention assessment system for classrooms based on head-pose estimation. We believe this work could greatly benefit both instructors’ teaching abilities and students’ academic performance.

Several studies have demonstrated the beneficial effects of brain training exercises on cognitive function. However, none of these studies was conducted in an Arabic-speaking population. In collaboration with colleagues from Tohoku University and other research institutes in Taiwan and Australia, we developed intelligent computer- and robot-based cognitive training exercises that will be evaluated in healthy Emirati elderly people. Alongside the study, an autonomous cognitive assessment technique for Emirati elderly people will also be investigated.

Our aim is to design and develop a prototype device for post-stroke patients suffering from foot drop. The device will help improve their gait and movement by assisting ankle dorsiflexion for foot clearance in the swing phase and by minimizing foot slap at initial contact.

Our target in this research is to build an easy-to-use rehabilitation and assistive device for post-stroke patients. We are proud that the initial prototype of the robot arm was short-listed for the Robot4good competition in Dubai, UAE, in 2017.

A summary of the prototype and its working mechanism can be viewed at the following link (presentation time 00:10:00~00:20:00)

Videos and Media Highlights

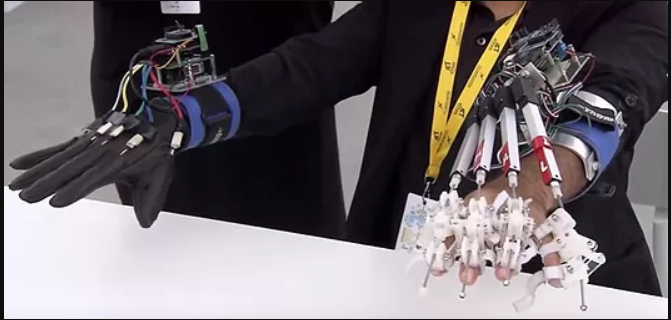

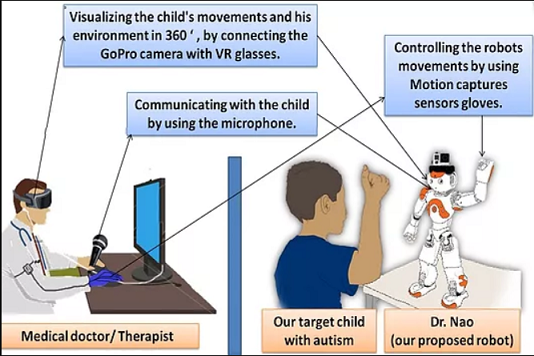

Autism is a neurodevelopmental disorder characterized by severe deficits in social interaction and communication skills. In this research direction, we are investigating the response of autistic children to a robotic-avatar therapeutic system uniquely designed and developed in our lab. Our system will be compared with known pre-programmed robots designed to interact with autistic children, as well as with conventional interactive therapy conducted by a therapist. Besides building the avatar robot, we are also developing an automated scoring system that measures the overall progress of the interaction between the robot and the child. The avatar robot can replicate the therapist’s presence by mimicking his/her motion and transferring his/her voice, while delivering visual and audio feedback from the child's environment to the therapist.

An extension of the avatar-robot work has also been presented for keeping the user away from dangerous things:

Videos and Media Highlights

http://www.thenational.ae/uae/technology/

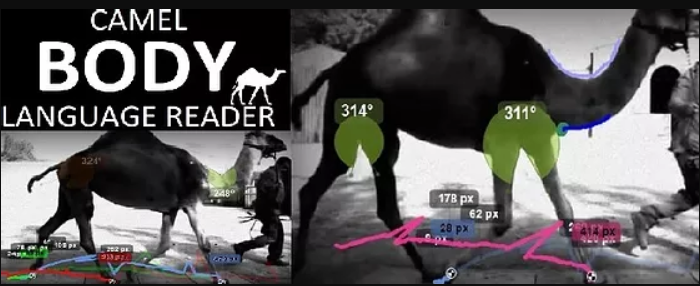

Camels are a key part of the heritage of the UAE. This research aims to understand camel behaviors and moods. The purpose of the study is to reduce the likelihood of post-race camel deaths and to build a communication mechanism between the camel and the user. The study consists of three stages. Stage 1 (collecting data): collecting various camel body behaviors using a high-quality 3D camera (Kinect) and mapping these data onto a simple camel model for further analysis. Stage 2: mapping data and deep learning. Stage 3: building an online expert device.

This work was shortlisted for the show at the Think Science competition 2017.

Videos and Media Highlights

Link 1- https://www.uaeu.ac.ae/en/news/2017/may/camelreader.shtml

Link 2- http://www.alittihad.ae/details.php?id=48223&y=2017

Thank you for your interest. For further information on the UAEU - Industry 4.0, please contact the following: